Danushka Bollegala¹ Norikazu Tokusue²

¹University of Liverpool, United Kingdom. ²Cognite, AS, Tokyo, Japan.

Abstract

The two-system model of human cognition has gained wide popularity in the Artificial Intelligence (AI) community. The co-existence of an intuition-driven, fast-acting System One and a reasoning-based, slower System Two splits the cognitive tasks humans carry out on a daily basis into two mutually exclusive groups. However, several important questions quickly surface when one attempts to implement a working prototype based on the two-system model, such as (a) how external sensory inputs are distributed between the two systems, (b) how the rewards for actions are fed back to learn the parameters of each system, and (c) how System Two can provide justifications for decisions made by System One, to name a few. In this opinion piece, we propose a third, meta-level system, which we refer to as System Zero, to address these open questions. We take an engineering perspective on Artificial General Intelligence (AGI) and propose a model of AGI based on three systems: Systems Zero, One and Two. Our aim in this opinion piece is to initiate a discussion in the AI community around the proposed AGI model, fuelling future research towards realising AGI.

The Two-System Model of Intelligence

The two-system model of the human decision-making process was popularised by the Nobel laureate Daniel Kahneman in his New York Times best seller Thinking, Fast and Slow (1). Specifically, Kahneman argues for the existence of two cognitive systems, which he names System One and System Two. System One handles unconscious decisions, such as emotional responses and reflexive actions that do not require significant focus or effort, whereas System Two deals with conscious, logical decisions that take time to process and often require significant cognitive effort. As an overall trend, decisions made via System Two turn out to be significantly slower than those made via System One. The two systems collectively explain almost all day-to-day decisions taken by humans, and can therefore be seen as a model of general intelligence that is not limited to a specific, finite set of tasks. This two-system view has received wide attention from the AI community as a working model of human intelligence. For example, in his Posner lecture at NeurIPS 2019 (2), the Turing Award winner Yoshua Bengio referred to the two-system model to explain the requirements that AI systems must satisfy if they are to generalise to out-of-domain tasks.

| |

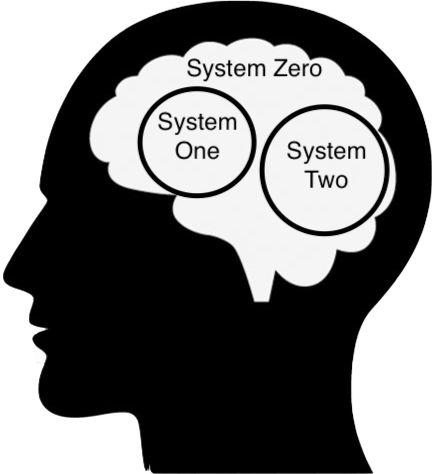

| Figure 1: The Three-System Model of Human Intelligence. System Zero encompasses Systems One and Two and acts as the sole interface between external sensors related to vision, hearing, touch, taste and smell and Systems One and Two. System Zero determines which system must handle a particular sensory input and is responsible for delivering the feedback for the purpose of learning. |

|

| Figure 1: The Three-System Model of Human Intelligence. System Zero encompasses Systems One and Two and acts as the sole interface between external sensors related to vision, hearing, touch, taste and smell and Systems One and Two. System Zero determines which system must handle a particular sensory input and is responsible for delivering the feedback for the purpose of learning. |

System Zero — The Missing Third System

Despite the simplicity of the two-systems view of human intelligence, it poses several unanswered questions, which raise concerns about its completeness as a model of intelligence. Next, we discuss these questions in detail.

Given a sensory input, such as a sight captured by the eye or a sound captured by the ear, it is not clear who decides which system must process it. For example, if you are driving a car, then the sight of a pedestrian crossing the road right in front of you should ideally be sent to and processed by System One, because you must act quickly to save the life of that pedestrian. On the other hand, if you are solving a complex mathematical problem, you would need to recall different mathematical tools and make a chain of inferences, which are typically tasks delegated to System Two. But who decides what should be sent to System One vs. System Two?

A question that is particularly relevant when implementing an AGI model based on the two-system theory is learnability. Once an action has been taken and a reward for that action has been received from the external environment, we must feed this reward back to the system that was involved in taking that action. This is important for that system to update its parameters so that future rewards are maximised and incorrect decisions are minimised. However, who ensures that the correct system gets the intended feedback? More importantly, who takes responsibility for updating the parameters of the appropriate system? Without proper learning, an AGI system will continue to repeat its failures, and there will be no incentive for the two systems to learn from their mistakes.

As stated above, System One operates unconsciously and its decisions do not require justifications or explanations. However, we might be asked after the fact to explain our decisions, even when they were taken by System One without any carefully planned set of justifications. For example, a careless driver who hits a pedestrian would try to justify his or her mistake via elaborate excuses, even though the incorrect decision was not based on those excuses in the first place. Such reasoning about the decisions taken by System One would have to come from System Two, which is capable of making logical justifications. However, there is no incentive or obligation for System Two to provide explanations for the decisions made by System One. Because the two systems are mutually exclusive by design, System Two does not have access to the inner decision-making process of System One and would not be able to verify its decisions. Therefore, it remains unclear how System Two can provide justifications for the decisions taken by System One.

We propose a simple fix to overcome the above-mentioned shortcomings of the two-system theory. Specifically, we introduce a third system, which acts at a meta-level, governing Systems One and Two as shown in Figure 1. We refer to this third system as System Zero to emphasise the fact that it operates at a meta-level relative to Systems One and Two. System Zero acts as an interface between the external sensory inputs and Systems One and Two, and determines which system should handle a particular input. For example, if a human encounters a lion in the wild, then the human must act quickly, without conscious deliberation. In this case, the human might intuitively start to run on seeing the lion. For this to happen, the input from the eye must be processed by System One and not System Two. The role of System Zero would be to make this life-saving call. If System Two were to process this input instead, the human could be eaten by the lion before System Two completes its inference chain. Therefore, by having System Zero in place, we can avoid such an unfortunate event, which addresses the first problem discussed above.
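The routing role described above can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not an implementation proposed in this article: the `Percept` class, its `urgency` score, the 0.7 threshold and both handler functions are hypothetical, and how System Zero would actually estimate urgency is left open.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """A sensory input with a hypothetical urgency score in [0, 1]."""
    description: str
    urgency: float


def system_one(percept: Percept) -> str:
    # Fast, intuitive response: no explicit reasoning.
    return f"reflex: react immediately to {percept.description}"


def system_two(percept: Percept) -> str:
    # Slow, deliberate response: stands in for a chain of inferences.
    return f"deliberate: reason step by step about {percept.description}"


class SystemZero:
    """Meta-level router sitting between the sensors and Systems One and Two."""

    def __init__(self, urgency_threshold: float = 0.7):
        # Assumed rule: inputs at or above the threshold are too urgent to deliberate on.
        self.urgency_threshold = urgency_threshold

    def route(self, percept: Percept) -> str:
        return "one" if percept.urgency >= self.urgency_threshold else "two"

    def act(self, percept: Percept) -> str:
        handler = system_one if self.route(percept) == "one" else system_two
        return handler(percept)


zero = SystemZero()
lion = Percept("a lion in the wild", urgency=0.95)
maths = Percept("a complex mathematical problem", urgency=0.1)
print(zero.act(lion))
print(zero.act(maths))
```

With these assumed scores, the lion is handed to System One and the mathematical problem to System Two. A fixed threshold is only the simplest possible routing rule; the routing criterion could itself be learnt.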

Error-driven learning is a fundamental strategy for updating parameters in almost all modern machine learning algorithms. The predictions made by a model are compared against an oracle that provides immediate or delayed (in the case of reinforcement learning) feedback in the form of discrete or continuous grades, ratings or labels. Any deviation between the predicted and actual outcomes is measured using an error (or loss) function, and the parameters of the model that made those predictions are updated such that the error is minimised. However, a decoupled two-system agent, such as one consisting of Systems One and Two, would not be able to know which system should take responsibility for updating its parameters. For example, the legs (motor units) would not know whether the decision to run in the presence of a lion was made by System One or System Two. For the purpose of survival, this would not matter as long as running helped us to survive. However, for the purpose of learning, we need to direct the feedback to the relevant system. System Zero would act as this coordinator and ensure that the parameters of the responsible system are updated.
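The feedback routing described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions rather than a prescribed design: each system is reduced to a single-weight model trained on squared error, and the names `ScalarModel` and `FeedbackCoordinator`, as well as the learning rate of 0.1, are hypothetical.

```python
class ScalarModel:
    """Toy learner with one weight, trained on squared error."""

    def __init__(self, weight: float = 0.0, learning_rate: float = 0.1):
        self.weight = weight
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.weight * x

    def update(self, x: float, target: float) -> float:
        # Loss is 0.5 * (prediction - target)^2, so d(loss)/d(weight) = error * x.
        error = self.predict(x) - target
        self.weight -= self.learning_rate * error * x
        return error


class FeedbackCoordinator:
    """Stand-in for System Zero: remembers who acted and routes feedback there."""

    def __init__(self):
        self.systems = {"one": ScalarModel(), "two": ScalarModel()}
        self.last_actor = None

    def act(self, chosen: str, x: float) -> float:
        self.last_actor = chosen
        return self.systems[chosen].predict(x)

    def feedback(self, x: float, target: float) -> float:
        if self.last_actor is None:
            raise RuntimeError("no action has been taken yet")
        return self.systems[self.last_actor].update(x, target)


coordinator = FeedbackCoordinator()
coordinator.act("one", x=1.0)            # System One made the decision
coordinator.feedback(x=1.0, target=1.0)  # only System One is updated
print(coordinator.systems["one"].weight, coordinator.systems["two"].weight)
```

After the single feedback step, only the weight of the system that acted has moved; the other system is left untouched, which is the bookkeeping the text assigns to System Zero.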

Let us next consider how System Zero would help us to produce accountable AGI systems. Once an AGI system has made a decision, we might want to know why and how it arrived at that decision. Unlike simple decision trees or rule-based systems, where explanations are readily available in the form of rules, interpreting models learnt by modern machine learning algorithms such as deep neural networks is challenging.¹

However, we might still want, or might be legally required, to provide explanations or justifications for the decisions made by an AGI system, irrespective of whether the decision came from System One or Two. Given the nature of System One, there might not be any elaborate reasoning behind its decisions. For example, one's own past experiences (or experiences shared by others) could be the basis of a decision. System One might simply measure the similarity between the current situation and its database of past experiences or memories and make a quick decision. Revisiting our previous example, we might have seen lions killing other animals; this might induce a fear for our life, and System One might decide to ask the legs to run. On the other hand, System Two makes logically deduced decisions, often following long chains of reasoning. Therefore, if we need any explanation from an AGI system, our best hope would be to ask System Two instead of System One. Given the decoupled nature of Systems One and Two, this communication would have to go through some other coordinator. We posit that System Zero can fulfil this requirement.
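As a rough illustration of this division of labour, the following Python sketch lets System One decide by similarity to stored experiences, while System Two produces a rule-based justification after the fact and a coordinating function passes information between them. Everything here is assumed for illustration: the two-feature encoding of a situation, the contents of `MEMORY`, the single explanation rule and all function names are hypothetical.

```python
import math

# Hypothetical memory of past experiences: (feature vector, action taken).
# The two features are assumed to mean (predator visible, predator close).
MEMORY = [
    ((1.0, 0.9), "run"),
    ((0.1, 0.0), "stay"),
]


def cosine(a, b):
    # Cosine similarity; assumes neither vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def system_one_decide(situation):
    # Pick the action of the most similar past experience; no reasoning is kept.
    _, action = max(MEMORY, key=lambda item: cosine(situation, item[0]))
    return action


def system_two_explain(situation, action):
    # Post-hoc justification from explicit rules, without access to System One's internals.
    predator_visible, _predator_close = situation
    if action == "run" and predator_visible > 0.5:
        return "A predator was visible, so moving away reduces the risk of harm."
    return f"No rule-based justification is available for '{action}'."


def system_zero_decide_and_explain(situation):
    # System Zero is the only component that talks to both systems.
    action = system_one_decide(situation)
    explanation = system_two_explain(situation, action)
    return action, explanation


action, explanation = system_zero_decide_and_explain((0.9, 0.8))
print(action)
print(explanation)
```

Note that the explanation is constructed independently of how the decision was actually reached, which mirrors the post-hoc character of the justifications discussed in the text.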

Final Remarks

In this opinion piece, we attempted to highlight some important limitations of the two-system model of intelligence and proposed a third system, System Zero, to overcome those limitations. Specifically, we discussed the limitations associated with (a) delegation of work, (b) learnability and (c) explainability. We took an engineering viewpoint on the issue rather than a behavioural science one, and considered how an AGI system could be implemented based on this three-system model of intelligence. However, there is obviously a long journey ahead towards implementing a working AGI system. We would consider our efforts successful if our humble opinion provides food for thought for AI practitioners on this journey towards AGI.

References

1. D. Kahneman, Thinking, Fast and Slow (Macmillan, 2012).

2. Y. Bengio, From system 1 deep learning to system 2 deep learning (2019).

3. T. Mikolov, K. Chen, J. Dean, Proc. of International Conference on Learning Representations (2013).

4. F. K. Dosilovic, M. Brcic, N. Hlupic, 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO) (2018).

Notes

For example, static word embedding models such as the ones produced by word2vec (3) represent the meanings of words using lower-dimensional (typically fewer than 1000 dimensions) vectors. However, these vectors are randomly initialised and it is not possible to associate a particular meaning with individual dimensions. For example, it is not the case that the first dimension represents whether something is a living thing, is edible or can run fast. Words are projected into a lower-dimensional latent space such that semantically similar words are projected to nearby points. Therefore, the inner product (or, alternatively, a suitable distance measure) is the only meaningful quantity that we can use to interpret the learnt word embeddings. In this regard, static word embeddings (as well as models learnt by many other deep neural networks) are regarded as distributed representations, because no individual neurones are responsible for a particular prediction made by the learnt model; rather, all the neurones in the entire network (the parameters of the learnt model) are collectively responsible. Despite the best ongoing efforts in Explainable AI (4) research, it remains a significant challenge to provide explanations of the decisions made by deep neural networks that can be understood by humans.
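The point that only inner products, and not individual dimensions, are meaningful can be checked on a toy example. The sketch below uses made-up two-dimensional vectors (real word2vec embeddings have far more dimensions) and an arbitrary rotation angle: rotating every vector changes the value of each coordinate, yet leaves all pairwise inner products unchanged.

```python
import math

# Toy 2-dimensional "embeddings"; the words and values are made up.
EMBEDDINGS = {
    "lion": (0.9, 0.4),
    "tiger": (0.8, 0.5),
    "carrot": (-0.3, 0.9),
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rotate(vector, angle):
    # A rotation of the plane is an orthogonal map, so it preserves inner products.
    x, y = vector
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


rotated = {word: rotate(vec, math.pi / 3) for word, vec in EMBEDDINGS.items()}

for first, second in [("lion", "tiger"), ("lion", "carrot")]:
    before = dot(EMBEDDINGS[first], EMBEDDINGS[second])
    after = dot(rotated[first], rotated[second])
    print(first, second, round(before, 6), round(after, 6))

print("lion, first dimension:", EMBEDDINGS["lion"][0], "->", round(rotated["lion"][0], 6))
```

Since the rotated vectors encode exactly the same similarities, no fixed meaning can be attached to any single coordinate of the original vectors.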

Independently of the motivations discussed in this article, the presence of a third system that coordinates Systems One and Two has been mentioned by Max Tegmark in Life 3.0 for the purpose of building consciousness in AGI agents. Tegmark argues that System One acts unconsciously, whereas System Two is conscious of its actions. However, without a proper working definition of consciousness, it is difficult to argue which systems are acting consciously and which are not. Therefore, we deliberately did not touch on the topic of consciousness in AGI agents in this article.